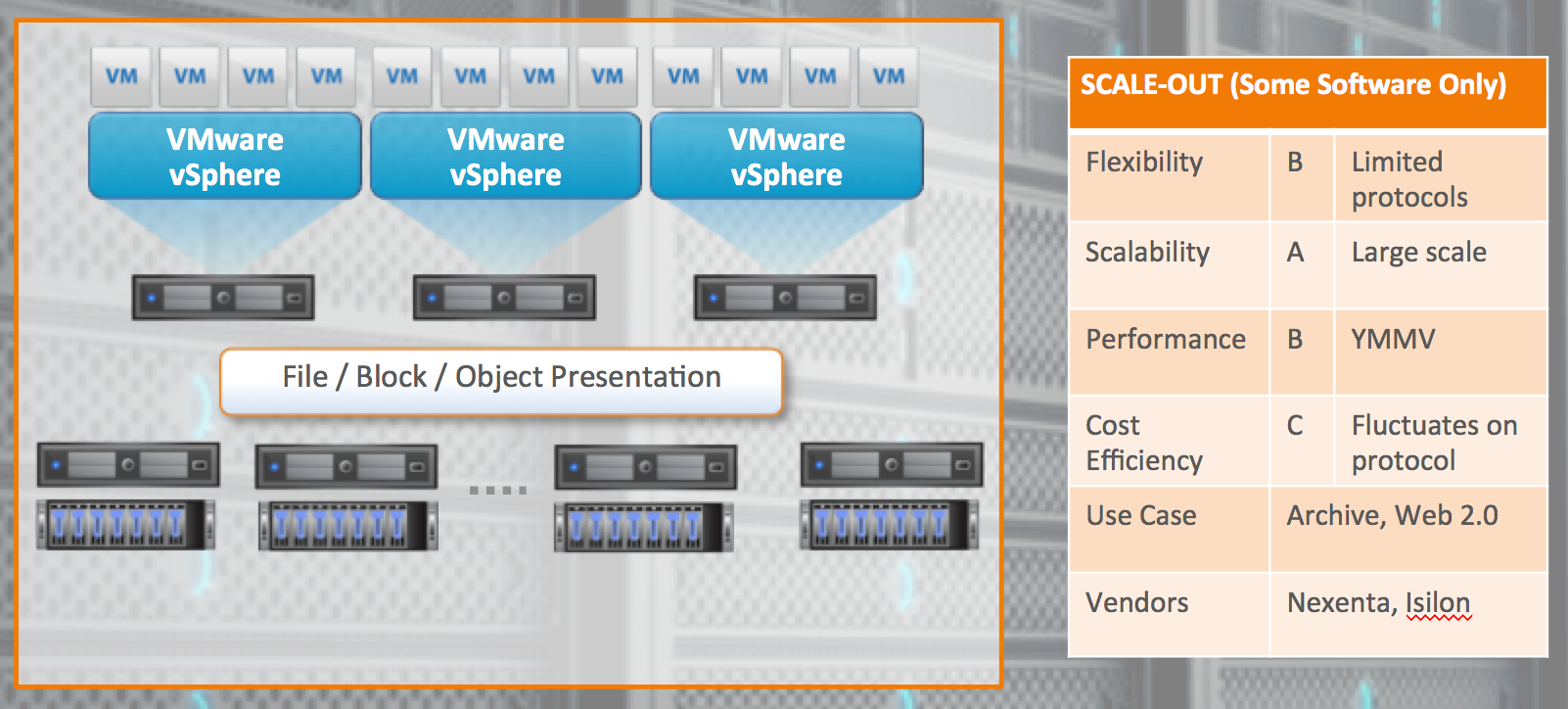

This is the third of six posts (the last one was Scale-up Software-Only “SDS”) in which we cover some practical details that help raise your SDS IQ and enable you to select the SDS solution that will deliver Storage on Your Terms. The third SDS flavor in our series is Scale-out.

Scale-out is a fundamentally different approach from scale-up: with scale-out, multiple head nodes are attached over the network to dramatically increase scalability. This is a broad category, and solutions can be either vendor-defined and hardware-based (think EMC’s Isilon) or software-only (Nexenta’s NexentaEdge). While we’d consider the software-only approach to be the SDS version, the technical benefits of either type of scale-out are similar. You use low-latency networking to connect as many nodes as you want and form a cluster that provides storage services to applications as a unified namespace.
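To make the "many nodes, one namespace" idea concrete, here is a minimal toy sketch in Python. It is not how Isilon, NexentaEdge, or any other product works internally. The class `ScaleOutCluster`, its methods, and the node names are all hypothetical, and rendezvous hashing is simply one assumed way to decide which node owns which path.

```python
import hashlib


class ScaleOutCluster:
    """Toy model of a scale-out cluster (hypothetical, not a vendor design).

    Applications see a single namespace of paths; the cluster decides
    which node actually holds each object.
    """

    def __init__(self, node_names):
        # Each node is modeled as a plain dict: path -> data.
        self.nodes = {name: {} for name in node_names}

    def _owner(self, path):
        # Rendezvous hashing (an assumption for this sketch): every node
        # gets a score for the path, and the highest score owns it.
        def score(node):
            return hashlib.sha256(f"{node}:{path}".encode()).hexdigest()

        return max(self.nodes, key=score)

    def put(self, path, data):
        self.nodes[self._owner(path)][path] = data

    def get(self, path):
        return self.nodes[self._owner(path)][path]

    def add_node(self, name):
        """Grow the cluster by one node; return how many objects moved."""
        self.nodes[name] = {}
        moved = 0
        for node, store in self.nodes.items():
            if node == name:
                continue
            # Only objects whose owner is now the new node need to move.
            for path in [p for p in store if self._owner(p) == name]:
                self.nodes[name][path] = store.pop(path)
                moved += 1
        return moved


cluster = ScaleOutCluster(["node1", "node2", "node3"])
for i in range(100):
    cluster.put(f"/archive/file{i}", f"payload-{i}")

moved = cluster.add_node("node4")  # start small, grow by adding a node
```

Callers only ever use `put` and `get` with a path; they never name a node. That is the sense in which the cluster behaves as one namespace, and adding `node4` changes capacity without changing how applications address their data.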

The scale-out approach works well for Archive or Web 2.0 application use cases. Scalability is top notch, because you can start small and grow just by adding nodes. While it provides the performance needed to handle huge capacities, there’s an important dependence on the network: because of the amount of communication between nodes, the quality of your networking gear will significantly impact performance, and that may mean that your IOPS aren’t great.

The flexibility of scale-out SDS is generally good, but protocol support is currently limited. Often the maturity of the platforms themselves limits your flexibility; for example, you can’t use Exchange to write to an object back end. Likewise, object-oriented applications won’t work with some back ends. Protocol support also affects the cost effectiveness of scale-out: it may restrict your hardware choices and lock you in to more expensive purchases.

Overall grade: B

See below for a typical build and the report card:

In this digital era we are using and creating more data than ever before. To put this into perspective, in the last two years we created more data worldwide than in all the years before combined! Social media, mobile devices and smart devices, combined in the Internet of Things (IoT), tremendously accelerate the creation of data. We use applications to work with different kinds of data sources and to help us streamline these workloads. We create valuable information from the available data by adding context to it. A few examples of the data we use, store and manage are documents, photos, movies and applications, and, with the rise of virtualization and especially the Software Defined Data Center (SDDC), complete infrastructures in code as a back end for the cloud.
