In the last blog post, we determined that the world has changed since the early 2000s and that x86 has won the server war, which now gives you the chance to make vendors compete for your business. But servers are only part of the data center; we still have to look at the rest of the infrastructure.

Networks that can shift and deliver at the same time

In the past, if you wanted the fastest network infrastructure, you had to buy from one or two specific vendors. Those vendors still hold a huge share of the networking market, but we've seen a transition in recent years.

That transition is not just about the software layer, though. With scale-out systems, the network has become critical not only for inter-rack and inter-data center connectivity but also for processing power and speed. The move from 1 Gb to 10 Gb to 40 Gb, and even up to 100 Gb Ethernet, lets more traffic than ever before move around the globe. This is all happening at a scale that can give even the smallest enterprise access to faster connectivity, but it still comes at a cost.
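To put those link speeds in perspective, here is a back-of-the-envelope sketch of how long it would take to move a single terabyte at each nominal line rate. The 1 TB payload and the `transfer_seconds` helper are hypothetical, chosen only for illustration, and the figures are idealized: they ignore protocol overhead, congestion, and storage bottlenecks, so real transfers will take longer.

```python
# Idealized transfer time at nominal Ethernet line rates.
# Assumption: ignores protocol overhead, congestion, and storage limits,
# so real-world transfers will take longer than these figures.

LINK_SPEEDS_GBPS = [1, 10, 40, 100]  # nominal line rates, gigabits per second


def transfer_seconds(size_bytes: float, gbps: float) -> float:
    """Return the idealized time in seconds to move size_bytes over a gbps link."""
    bits = size_bytes * 8  # 8 bits per byte
    return bits / (gbps * 1e9)  # 1 Gb/s = 10**9 bits per second


if __name__ == "__main__":
    one_terabyte = 1e12  # hypothetical payload: a decimal terabyte (10**12 bytes)
    for speed in LINK_SPEEDS_GBPS:
        secs = transfer_seconds(one_terabyte, speed)
        print(f"{speed:>3} Gb/s: {secs:>6.0f} s ({secs / 60:.1f} min)")
```

Each step up divides the idealized transfer time by the ratio of the speeds, so a terabyte that takes over two hours at 1 Gb/s moves in under a minute and a half at 100 Gb/s.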

While 10 Gb is becoming commonplace in the data center, it is still much more expensive than 1 Gb and has not yet trickled down to the end user. The real question is: does it need to? If the data center runs on commodity hardware governed by software, and all the compute happens there, do we really need more speed at the endpoint? To answer that, we will have to wait and see.

The brains behind the brawn

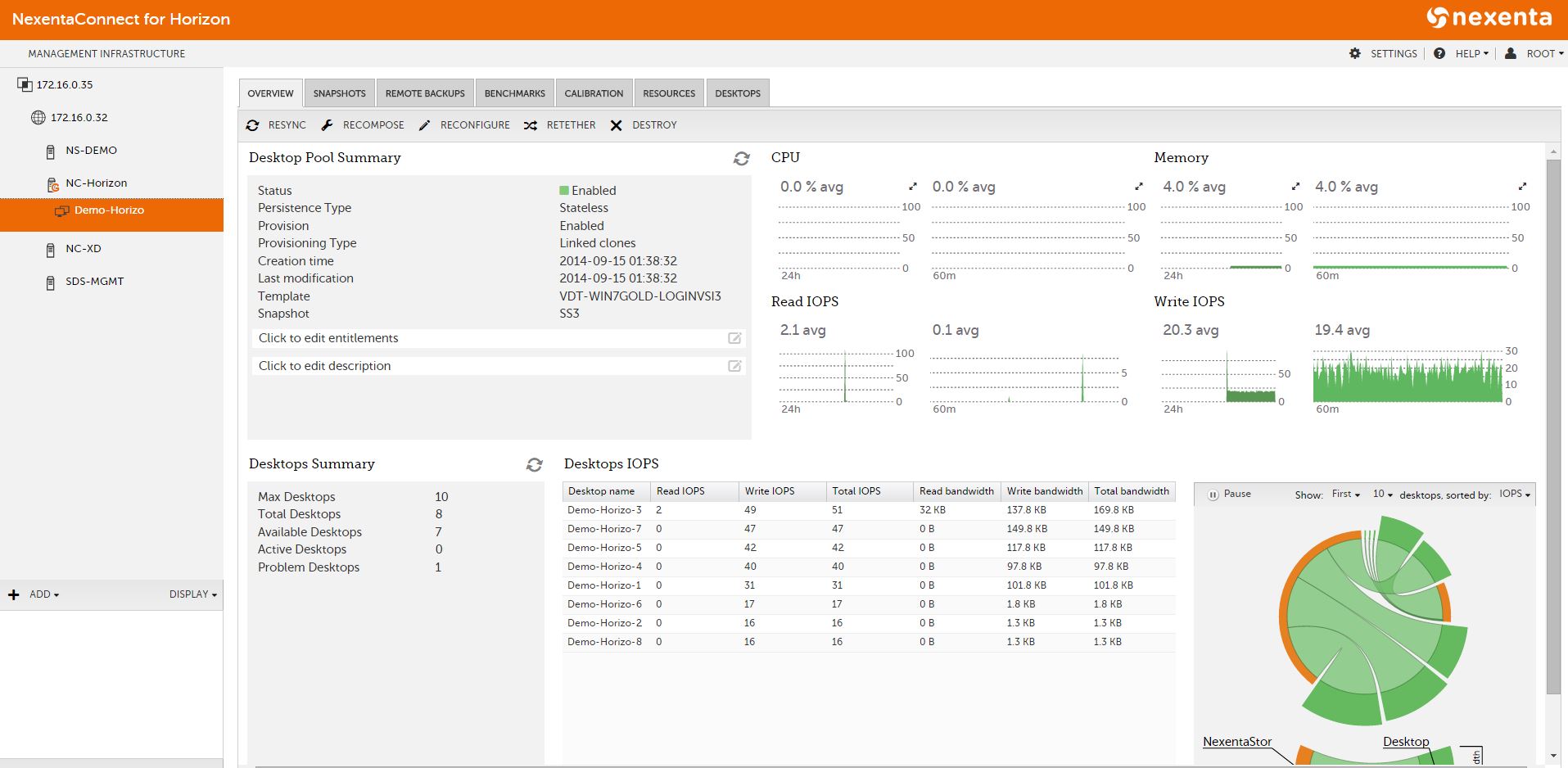

The No. 1 buzzword of the last few years has been "software-defined," and it rings true for the transition we have seen in the data center over the last 10 years. The first step was compute, with virtualization establishing itself as a stalwart for delivering the processing power needed on commodity hardware.

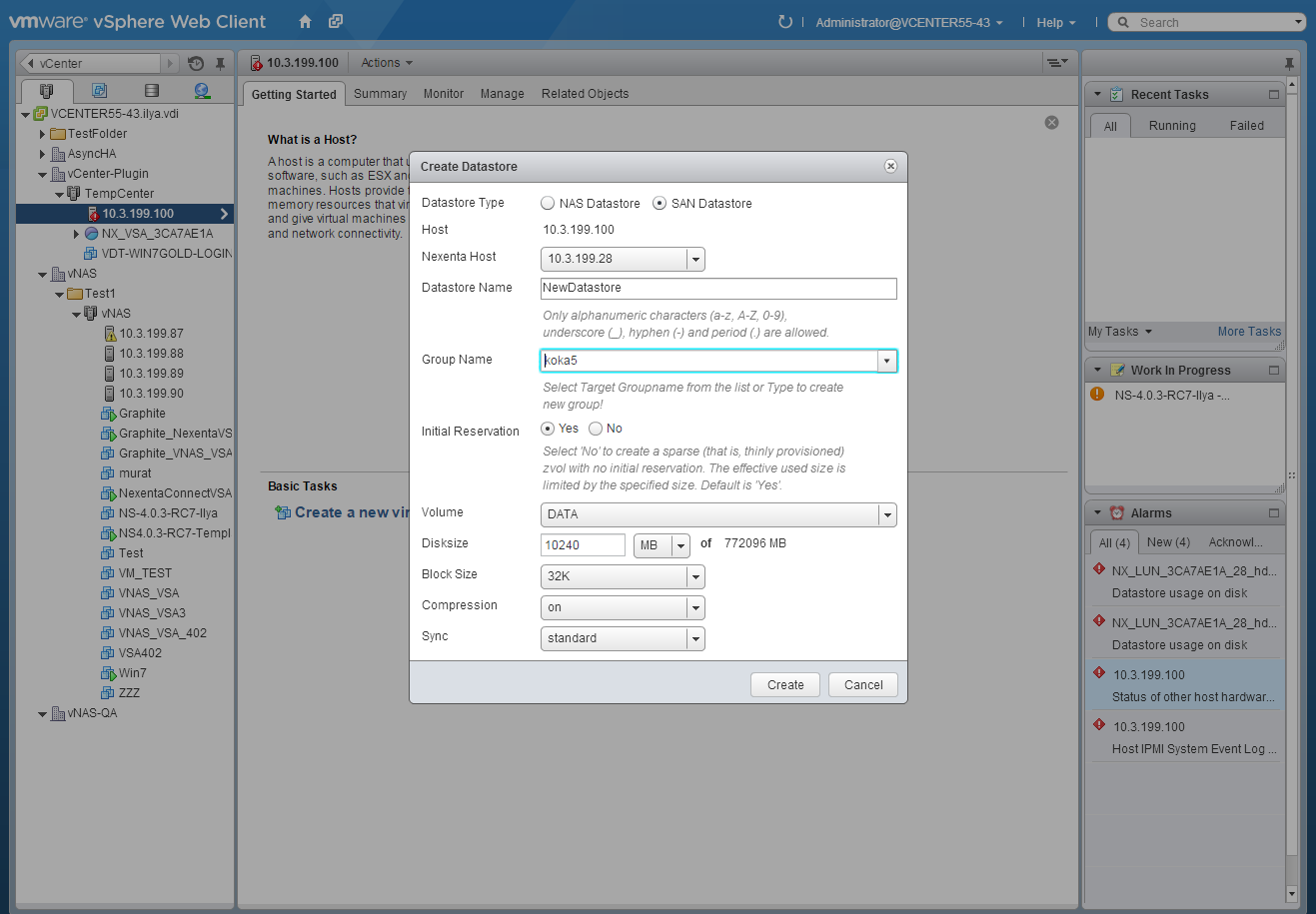

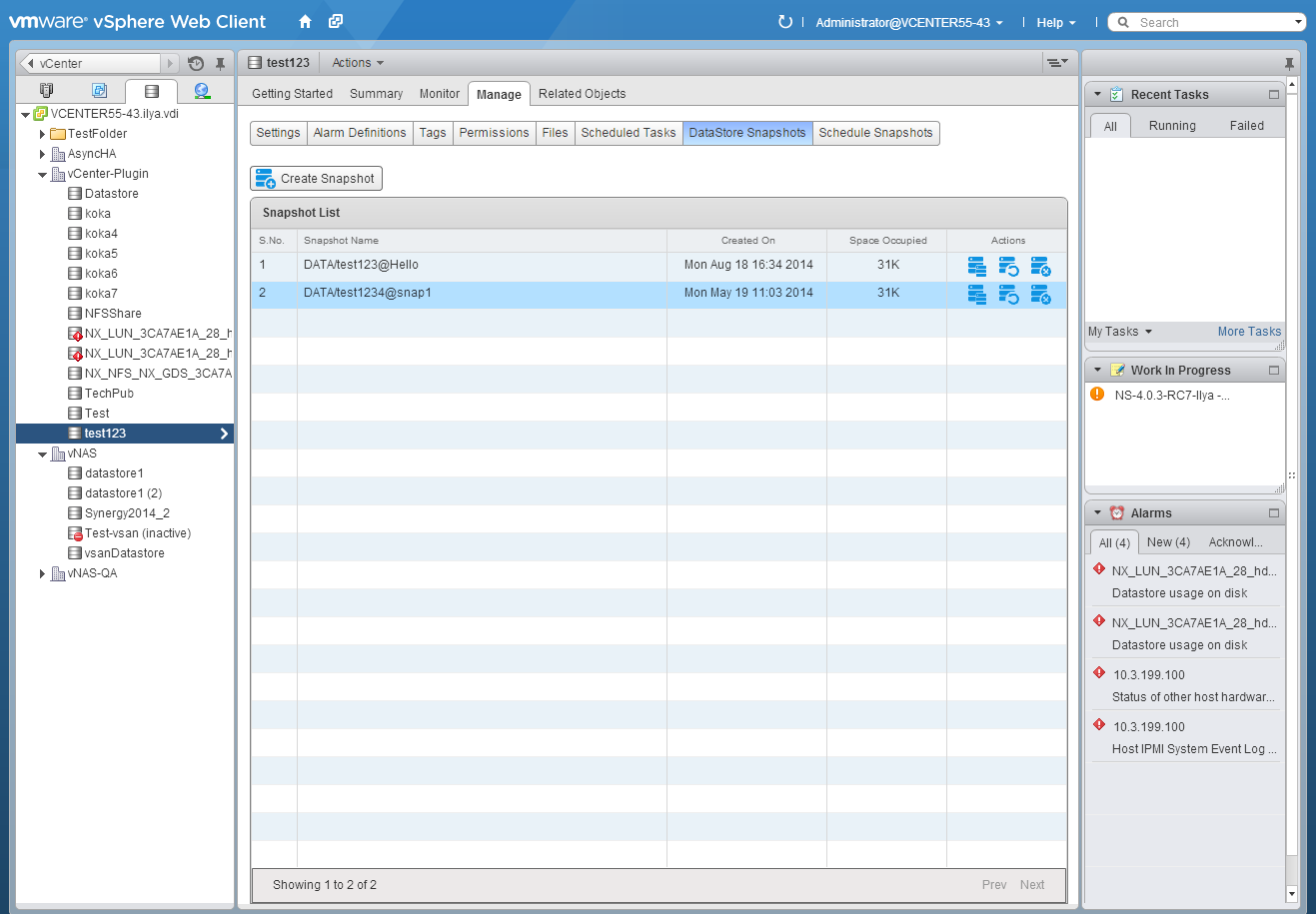

The next two parts that need to move into the software-defined space to allow for a commodity-driven data center are storage and networking. Some would argue that we are already there, with executives from major storage vendors stating as early as 2010 that "we all run on commodity hardware."

In reality, you are normally limited to the hardware that a vendor chooses. Moving to a software-defined model gives us a choice of vendors and can truly free our storage needs from the hardware vendor's grasp. The growth of open source solutions has helped this along, and we will see whether 2016 is the year we take the leap and make software-defined the norm.

Networking, on the other hand, is the last step. You cannot yet buy a blank switch off the shelf, layer on the software of your choosing, and make it work at the speed and capacity you want, but we are starting to see a move in that direction. Together, these software-defined solutions give companies the agility to become the enterprises of tomorrow, letting them custom-fit a solution to the business instead of the other way around. Software-defined appears to be more than just a buzzword.

So is it the year?

My gut says the commodity-based data center is not ready to go mainstream, but for intrepid companies willing to source the right gear, it may be. We have seen so many changes in the data center since the ’90s and the early 2000s that I have to believe the pace of change will continue.

Before we cross into 2020, the data center will once again be in the domain of the business, not the vendors. That is the commodity data center that anyone who has managed one in the last 20 years has been dreaming about.